Mistral AI, the French unicorn startup, has launched its new language model Mixtral 8x7B, based on the 'Mixture of Experts' approach. This multilingual model outperforms Llama 2 70B and GPT-3.5 on several benchmarks, offering fast performance and optimized resource management.

French startup Mistral AI, recently valued at €1.86 billion after raising €385 million, has announced the launch of its new language model, Mixtral 8x7B. This model, built using the "Mixture of Experts" approach, promises impressive performance.

Impressive Valuation and Cutting-Edge Model

Just weeks after unveiling its first large language model, Mistral AI has officially become a unicorn. The company describes its new model, Mixtral 8x7B, as the most powerful open-source language model to date. It outperforms Llama 2 70B on most benchmarks while running inference six times faster, and it matches or surpasses GPT-3.5 on several criteria.

Mixtral is a multilingual model, capable of understanding English, French, Italian, German, and Spanish. It excels in programming and can handle a context window of 32,000 tokens (approximately 25,000 words), comparable to GPT-4.

The "Mixture of Experts" (MOE) Approach

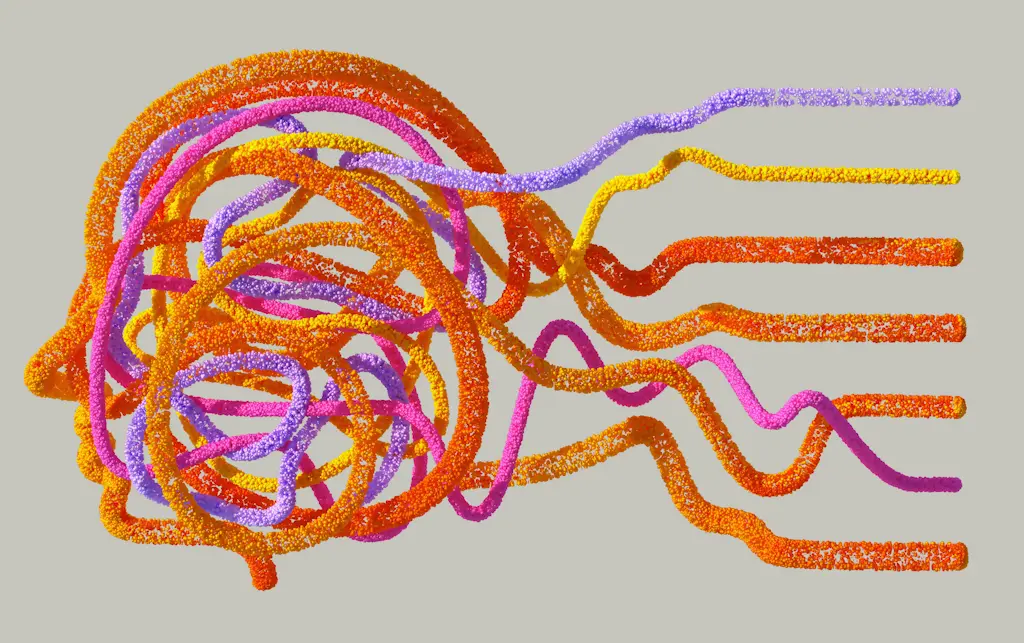

Mixtral relies on the "Mixture of Experts" (MOE) approach, which increases the number of parameters while keeping cost and latency under control. The model has 46.7 billion parameters in total, but only 12.9 billion of them are used for any given token, so it processes input at roughly the cost and speed of a 12.9-billion-parameter model.
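The gap between the two figures can be made concrete with a little arithmetic. The sketch below assumes, as is commonly reported for Mixtral but not stated in this article, that each layer holds 8 experts and routes every token to 2 of them. The function name `split_parameters` and the simple shared/per-expert split are illustrative simplifications, not Mistral AI's published breakdown.

```python
# Back-of-the-envelope split of Mixtral's parameters.
# Assumption (not from the article): 8 experts per layer, 2 active per token.
TOTAL_B = 46.7      # total parameters, in billions (from the article)
ACTIVE_B = 12.9     # parameters used per token, in billions (from the article)
NUM_EXPERTS = 8     # assumed
ACTIVE_EXPERTS = 2  # assumed


def split_parameters(total, active, num_experts, active_experts):
    """Solve the two equations
         shared + num_experts    * per_expert = total
         shared + active_experts * per_expert = active
    for the shared and per-expert parameter counts."""
    per_expert = (total - active) / (num_experts - active_experts)
    shared = active - active_experts * per_expert
    return shared, per_expert


shared_b, expert_b = split_parameters(TOTAL_B, ACTIVE_B, NUM_EXPERTS, ACTIVE_EXPERTS)
print(f"shared: ~{shared_b:.1f}B, per expert: ~{expert_b:.1f}B")
print(f"active fraction per token: {ACTIVE_B / TOTAL_B:.0%}")
```

Under these assumptions, each token touches a little over a quarter of the model's weights. The shared portion (attention layers, embeddings and so on) is counted only once, which would explain why the total is well below the 56 billion that the name "8x7B" might suggest.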

In the MOE architecture, separate sub-networks called "experts" each handle different kinds of input. A gating network, also called a router, sends each token to the experts most relevant to it. Because only a few experts run for any given token, pre-training is much more compute-efficient, and inference is faster than in a conventional dense model with the same total number of parameters.
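The routing step described above can be sketched in a few lines of plain Python. This is a toy illustration, not Mixtral's actual implementation: the experts here are single random linear maps rather than full feed-forward blocks, and the names `moe_layer`, `make_expert` and the choice of `top_k=2` with 8 experts are assumptions made for the example.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """A toy 'expert': one random linear map (hypothetical stand-in)."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


def moe_layer(x, gate_weights, experts, top_k=2):
    """Route one token vector x through its top_k experts."""
    # Gating network: one score per expert for this token.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never run.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalise the chosen scores so the mixing weights sum to 1.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, idx in zip(mix, chosen):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
DIM, NUM_EXPERTS = 4, 8
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
gate_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_layer(token, gate_weights, experts)
print("experts used for this token:", chosen)
```

Only the selected experts are evaluated, so the compute per token depends on `top_k` rather than on the total number of experts. That is the source of the efficiency gain the article describes.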

A Promising Innovation for AI

Mistral AI continues to stand out in the AI startup world with a model that improves performance while using only a fraction of its parameters for each token. Mixtral represents a major step in the evolution of language models and could make high-performing artificial intelligence cheaper and faster to run.

Source: ICTJournal